MUMBAI, 21 NOVEMBER, 2023 (GPN): Even as the Delhi Police registered an

FIR at the Special Cell police station

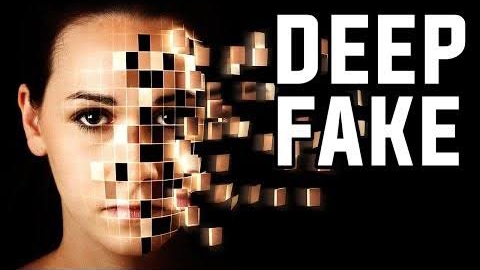

in connection with the deepfake AI-generated video of actor Rashmika

Mandanna, the questions being asked are, how were these deepfakes created and is there a way to protect oneself?

Srijan Kumar, a computer scientist and assistant professor at the Georgia Institute of Technology, says generative AI models have reduced the cost of producing high-quality content, both good and bad.

“Now, bad actors can easily create realistic-looking, but entirely fabricated, misinformative content,” he points out. AI digital tools enable manipulation on a previously unimaginable scale.

“There have always been fake images, but now they can instantly target a huge number of potential victims in a short amount of time,” says Srijan.

Diffusion models

Making matters worse is the diffusion model, a newer class of AI tool that allows tech-savvy predators to take real-life photographs from the Internet, including shots displayed on social media sites and personal blogs, and re-create them as almost anything.

“These AI-generated visuals have the ability to undermine any tracking systems that ban such content from the web. Because of the system disturbance, determining whether an image is real or created by AI is nearly impossible,” says Srijan, who was honoured with the ‘Forbes 30 Under 30’ award for his work on social media safety and integrity.

Diffusion models are trained to generate new images by learning to de-noise, or reconstruct: during training, noise is gradually added to real images and the model learns to reverse that process. Depending on the prompt, a diffusion model can then create wildly imaginative pictures within seconds, based on the statistical properties of its training data.

“Unlike in the past, when individual faces had to be superimposed, not much technical knowledge is required now. The diffusion model can quickly generate many images with a single command,” Srijan explains.
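For readers curious about what “learning to de-noise” means in practice, the sketch below shows the core arithmetic on a toy four-pixel “image”, assuming the standard formulation from the research literature (a linear noise schedule from 0.0001 to 0.02 over 1,000 steps). It is an illustration only, not how any particular product works: the function names are hypothetical, and the “denoiser” here is handed the true noise, whereas a real model is a neural network trained to estimate that noise, usually guided by a text prompt.

```python
import math
import random


def alpha_bars(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> list[float]:
    """Cumulative products of (1 - beta_t) for an assumed linear noise schedule."""
    out, prod = [], 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        prod *= 1.0 - beta
        out.append(prod)
    return out


def add_noise(x0, t, abar, rng):
    """Forward process: jump straight to step t via x_t = sqrt(abar_t)*x0 + sqrt(1-abar_t)*eps."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    a = abar[t]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return xt, eps


def reconstruct(xt, eps_pred, t, abar):
    """Undo the forward formula using a noise estimate: x0 = (x_t - sqrt(1-abar_t)*eps) / sqrt(abar_t)."""
    a = abar[t]
    return [(x - math.sqrt(1.0 - a) * e) / math.sqrt(a) for x, e in zip(xt, eps_pred)]


def training_loss(eps_true, eps_pred):
    """Mean squared error between true and predicted noise, the usual training objective."""
    return sum((a - b) ** 2 for a, b in zip(eps_true, eps_pred)) / len(eps_true)


if __name__ == "__main__":
    rng = random.Random(0)
    abar = alpha_bars(1000)
    image = [0.2, 0.8, 0.5, 0.1]  # hypothetical toy "image" of four pixel values
    xt, eps = add_noise(image, 500, abar, rng)
    # Oracle denoiser: uses the true noise; a trained network only approximates it.
    recovered = reconstruct(xt, eps, 500, abar)
    print([round(v, 6) for v in recovered])  # [0.2, 0.8, 0.5, 0.1]
    print(training_loss(eps, eps))  # 0.0
```

Training pushes the network's noise estimate towards the true noise, which drives the loss towards zero. To generate a brand-new picture, a model starts from pure random noise and repeatedly removes its predicted noise, which is why no source photograph is strictly needed once the model is trained.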

Stable Diffusion

Stable Diffusion is one of the most adaptable AI image generators, and its code and model weights are publicly available. Because anyone can download and run it, the deep generative neural network is seen as a substantial leap in image synthesis; earlier text-to-image models such as DALL-E and Midjourney were available only through cloud services.

Authorities in the West believe that Stable Diffusion is the tool unscrupulous people rely on to generate the pictures they want. So, are there detection and mitigation solutions?

“There are several, but they are preliminary and do not account for the many types of generated information,” says Srijan.

One major challenge is that as new detectors are developed, generative technology evolves to incorporate methods that evade them.

Exploiting the unwary

“The majority of deepfakes on the internet are pornographic and exploit non-consenting women,” says Nirali Bhatia, a cyber psychologist and TEDx speaker recognised for studying online behaviour and treating victims of cybercrime. “As the name implies, it is a forgery that can have serious consequences for one’s reputation,” she says.

According to research, deepfakes have an impact on both the conscious and subconscious minds. “Victims of deepfake videos typically face criticism and distrust. [Deepfakes] have the potential to influence our perceptions of others and cause trust issues,” she says, adding that a deepfake is a hyper-realistic digital version of a person that can be manipulated into doing or saying anything.

Noting that deepfakes are increasingly being used to harass and intimidate public figures and activists, as well as to harm those in business, entertainment and politics, Nirali says, “AI is now making it difficult for us to distinguish between the real and the fake. It is critical that we disregard the adage ‘seeing is believing,’ because in cyberspace, you cannot believe what you see.”

Anand Mahindra shared an artificial intelligence (AI)-generated deepfake video to warn people about the dangers of such content.

To combat deepfakes, we must:

1. Educate ourselves
2. Verify the source
3. Use reverse image search
4. Cross-verify the video content with reliable sources before letting it shape our perceptions or making a judgment

“The need of the hour is to create defence tools, just like anti-virus and anti-malware software, and deploy them on end-user devices,” says Srijan Kumar, computer scientist.

